Update 20.5.2021: Seems to be that just before I published this post Microsoft has published a new GUI option “Export Lists to CSV” according to MC254423. Tested this one as well and it performs smother than the “export to excel”, but still seems to have a limitation of up to 30 000 items.

This post originates from a need to replicate Power Apps application that has some supporting flows and uses Sharepoint lists as database. I was hoping to find GUI based easy way to export/ import the application. That functionality exists for the application itself (Export and import canvas app packages). Similarly you can export Sharepoint lists one by – but it has quite many limitations. Exporting a list with a lot of items ended up crashing my excel (part of the export process). Then importing from Excel is limited to 20 000 rows. Also text type columns have length limitations. I had to do it another way.

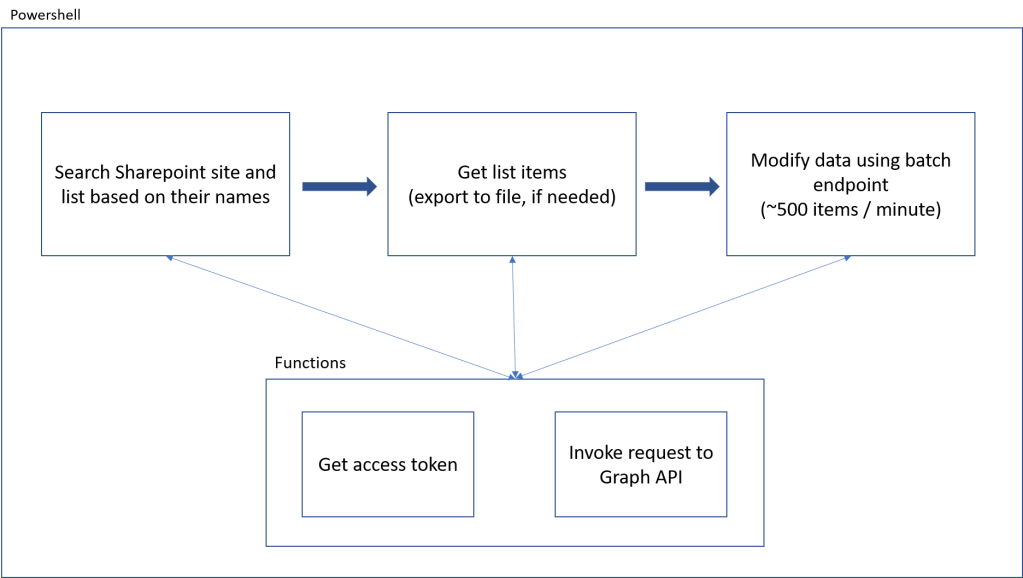

So I found myself with a task, that could be accomplished by calling GraphAPI using Powershell. The biggest of my Sharepoint lists had hundreds of thousands of items. This kind of volume meant, that for the first time, I was to encounter throttling. Throttling means that if you use too much resources you get reply with instructions to have a pause for some random time (based on my observations something like 30 to 350 seconds). Exporting happens in pages of 200 items and thus is quite fast. Importing on the other hand happens by default on-by-one that means extremely long run time.

Batch API makes it possible to gather a batch of requests and send them in one action. I started by reading this excellent blog post by Michal Sacewicz, whos script I have used as a starting point for my use case. Currently you can combine up to 20 requests in one batch.

What makes things interesting is that some of the requests in one batch can be fulfilled while in the same time others might end up being throttled. This means that running this kind of volume through batch API you need to keep attention to result of every individual request in each batch. When some of the requests are throttled you should resend only those, at least when creating items. Running my script, throttling looks like this:

Throttling on 6900 for 54 seconds. 2 items waiting.

Throttling on 6900 for 303 seconds. 1 items waiting.

Processed 6900 of 307353

Throttling on 6920 for 314 seconds. 1 items waiting.

...

Throttling on 85220 for 45 seconds. 1 items waiting.

Processed 85220 of 307353

Processed 85240 of 307353

Throttling on 85260 for 54 seconds. 2 items waiting.

Processed 85260 of 307353

Throttling on 85280 for 324 seconds. 3 items waiting.

Processed 85280 of 307353

Throttling on 85300 for 329 seconds. 4 items waiting.Even using the batch API the import run is going to take for a long time. That means your access token is going to expire at some point (after its validity of one hour). That means you will have to handle also refreshing the token.

Michal wrote about processing 1000 items under a minute. With my volumes I got processed something like 500 items per minute. I didn’t do a comparison without batching (with full data) but I assume it would be much slower.

The following script is actually further developed after exporting and importing those lists. I needed to also manipulate the data a little bit later on, so this is one example of the scripts I ended up using for this exercise.

First I declare a couple of functions to support rest of the script

# If something starts going wrong, I prefer to stop

$ErrorActionPreference = "Stop"

# Variables used for finding your list

$SPSite = "YourSite"

$SPList = "YourList"

Connect-PnPOnline -Url "https://YourSite.sharepoint.com/"

$Header = $()

#Function for getting initial or new access token

function Get-AuthHeader {

$Token = Get-PnPGraphAccessToken -Decoded

$Header = @{

"access" = "Bearer $($Token.rawData)";

"Content-Type" = "application/json; charset=utf-8";

}

return $Header

}

#Function for requests agains Sharepoint API. Includes handling pagination and expired access token.

function Invoke-Restrequest {

Param

(

[Parameter(Mandatory)]

$Uri,

$Header,

[Parameter(Mandatory)]

$Method,

$Body

)

$Retry = 0

$Response = @()

$NextPage = ""

while($Retry -lt 2) {

try {

$ResponseInitial = Invoke-RestMethod -Uri $Uri -Headers $Header -Method $Method -ContentType "application/json" -Body $Body

# Batch API is only used for PATCH/PUT methods and at least here there is no need for handling pagination

if($Uri -eq 'https://graph.microsoft.com/v1.0/$batch') {

return $ResponseInitial

}

# Logic for reading additional pages

$Response = $ResponseInitial.Value

$NextPage = $ResponseInitial.'@Odata.NextLink'

$Page = 2

While($Null -ne $NextPage) {

Write-Host "Reading paged response, page $Page"

$ResponseInitial = Invoke-RestMethod -Uri $NextPage -Headers $Header -Method $Method -ContentType "application/json"

$Response += $ResponseInitial.Value

$NextPage = $ResponseInitial.'@Odata.NextLink'

$Page++

}

return $Response

}

catch {

# Handle missing or expired access token

if($_.Exception.Response.statuscode -eq "Unauthorized" -or $_.Exception.Response.statuscode -eq "InvalidAuthenticationToken") {

$script:Header = Get-AuthHeader

$Header = $script:Header

}

#Hadling missing or expired accesstoken for individual items while using batch API

elseif($_.Exception.Response.statuscode -eq "BadRequest") {

return "BadRequest"

}

$Retry++

}

}

}Then I find the site and the list I wanted. I can then export list items. It is then also possible to write export to a file, for example JSON. For actually exporting the lists I used another version that looped through all the lists on a specific site and exported each of them to individual files.

# Get all sites and select site based on the name provided by SPSite variable

$Uri = "https://graph.microsoft.com/v1.0/sites?search=*"

$Sites = Invoke-Restrequest -Uri $Uri -Header $Header -Method Get

$TargetSite = $Sites | Where-Object {$_.webUrl -like $SPSite}

# Get list of lists and select based on the name provided by SPList variable

$Uri = "https://graph.microsoft.com/v1.0/sites/$($TargetSite.id)/lists"

$SiteLists = Invoke-Restrequest -Uri $Uri -Header $Header -Method Get

$TargetList = $SiteLists | Where-Object {$_.displayname -eq $SPList}

# Get list items

$Uri = "https://graph.microsoft.com/v1.0/sites/$($TargetSite.id)/lists/$($TargetList.id)/items?expand=items"

$TargetListItems = Invoke-Restrequest -Uri $Uri -Header $Header -Method Get

# If needed, items on the list can be exported here

# $TargetListItems | ConvertTo-Json -Depth 4 | Out-File -FilePath "YourFile.json" -Encoding UTF8 -NoClobberThen its time to actually update each item on the list.

# Update logic

$TotalItems = $TargetListItems.Count

$I = 0

$ITotal = 0

$BatchSize = 20

$Requests = @()

:outer foreach($Item in $TargetListItems[$ITotal..(($TargetListItems.count)-1)]) {

$I++

$ITotal++

# Set the colums you want to update an their values

$Body = @{

"YourColumnName"="YourValue";

}

# Build POST request for the current item and add to $Requests array

$Request = @{

id = $i

method = "PATCH"

url = "/sites/$($TargetSite.id)/lists/$($TargetList.id)/items/$($Item.id)/fields"

body = $Body

headers = $header

}

$Requests += $Request

# If batch (array) contains specified number of items or if it's the last item from import

if ($I -eq $BatchSize -or $ITotal -eq $TotalItems) {

$Retry = 0

$Success = $false

# While loop to hande possible item level issues with expired or missing access tokens within a batch request

while($Retry -lt 2 -and $Success -eq $false) {

# In case of item level issues with expired or missing access tokens within a batch request, rewrite header for each item remaining to process

if($Retry -gt 0) {

foreach($Object in $Requests) {

$Object.headers = $Script:Header

}

}

$BatchRequests = @{

requests = $Requests

}

$BatchBody = $BatchRequests | ConvertTo-Json -Depth 4

# Send batch request

$RetryLimit = 3

$RetryCounter = 0

Do {

$BatchRequestResponse = Invoke-Restrequest -Method Post -Uri 'https://graph.microsoft.com/v1.0/$batch' -Header $Header -Body $batchBody

# In case of item level issues with expired or missing access tokens within a batch request, rewrite header for each item remaining to process

# by running while loop again

if($BatchRequestResponse -eq "BadRequest") {

$Retry++

break

}

# Handling throttling of individual items. Find longest throttle time and use it for all remaining items.

$Status = $BatchRequestResponse.responses | Select-Object status -Unique

if($Status.status -contains "429") {

$Retryafter = $BatchRequestResponse.responses.headers."retry-after" | Sort-Object | Select-Object -Last 1

$BatchRequestsTemp = @()

foreach($Request in $BatchRequests.requests) {

if(($BatchRequestResponse.responses | Where-Object {$_.id -eq $($Request.id)}).status -notin 200,201) {

$BatchRequestsTemp += $Request

}

}

$BatchRequests = @{

requests = $BatchRequestsTemp

}

$BatchBody = $BatchRequests | ConvertTo-Json -Depth 4

write-host "Throttling on $ITotal for $Retryafter seconds. $($BatchRequests.requests.count) items waiting."

Start-Sleep -seconds ([int]$Retryafter + 5)

}

# Successful batch

elseif((($Status.status).count -eq 1) -and ($Status.status -in 200,201)) {

write-host "Processed $ITotal of $($TargetListItems.count)"

$BatchRequestResponse.responses.status | Select-Object -Unique

$Success = $True

}

# Other temporary errors, retry within retry limit

else {

$RetryCounter++

if($RetryCounter -eq $RetryLimit) {

Write-Host "Error $($Status.status)"

Break outer

}

Write-Host "Error $($Status.status), retry $RetryCounter"

Start-Sleep -Seconds 3

$BatchRequestsTemp = @()

foreach($Request in $BatchRequests.requests) {

if(($BatchRequestResponse.responses | Where-Object {$_.id -eq $($Request.id)}).status -notin 200,201) {

$BatchRequestsTemp += $Request

}

}

$BatchRequests = @{

requests = $BatchRequestsTemp

}

$BatchBody = $BatchRequests | ConvertTo-Json -Depth 4

}

} until ($Success)

}

# Reset batch item counter and requests array

$I = 0

$Requests = @()

}

}

Very good use case with nicely handled throttling. Thanks for sharing!

LikeLike

Thanks! Your blog was definitely helpful for me. Hopefully I can help someone as well by sharing this.

LikeLike

i tried using this it was a great help but it on export 200 records. what to do for more than 200 records how to export ?

LikeLike

Thanks for your question! That is exactly what these script example were about to help with. That Invoke-Restrequest contains logic to handle that paging. So if you’re getting actual data, I would have expected you to get all the items. I haven’t been using this lately and I have no chance to test if it still works as it did at the time of writing this. Sounds to me like you’re only getting the first page, but I might not able to help you further. Sorry!

LikeLike